Overview

A good draft is a big part of doing well in Fantasy Football. Every year, Fantasy Football pundits write droves of articles on “sleeper” picks, or players that aren’t on most people’s draft sheets. Many of these players are untested rookies drafted straight from college. So how do you know differentiate which rookies will remain sleepers from those who will propel your fantasy team to victory? One place to start is the NFL combine.

Indeed, I’m not the first person to investigate the relationship between combine stats/physical attributes and player performance. NFL teams do it every season, as well as fellow data nerds (see here). However, prior analyses have focused on performance over a longer period of time, such as the first three years in the league. This makes sense from the perspective of an NFL team, as rookies are typically signed for longer than a one-year contract. In contrast, most fantasy football leagues operate like one-year contracts; you draft a new team at the beginning of each year. Thus, in the case of NFL rookies, I’m interested exclusively in first year NFL performance. Can we learn anything from the combine that isn’t already baked into a rookie’s draft position?

NFL Combine Performance

The NFL combine is like the SAT or ACT except for football, with more lifting and less clothing. It’s a chance for NFL teams to see how fast and strong NFL hopefuls are in a controlled environment. During the combine players typically complete the following events:

- 40 yard dash

- Number of reps of 225lbs on the bench press (my number is 0)

- How high someone can jump (vertical leap) How far someone can jump (broad jump)

- Basically run back and forth between two lines as fast as possible (shuttle)

- Again more running between cones (3cone)

In addition to these drills, height and weight are also measured. This data gives NFL team’s a holistic perspective to evaluate players. Despite a number of findings indicating the limited utility of this information translating into actual performance in the NFL, the difference between a 4.4 second and 4.6 second 40 yard dash can mean big differences in draft position.

Draft Position

Most rookies in the NFL enter a team through the draft. A player’s draft position is a reasonable proxy for their perceived value. If a rookie is believed to be a game-changer, then they’ll have a low draft position; if they have some skills but need a little more work, then they’ll have a higher draft position. Indeed, rookie pay is directly correlated with draft position, such that players drafted in earlier rounds are paid more money than those drafted later. Thus draft position should relate to first-year performance, otherwise teams would just choose players at random, hoping to land the next Tom Brady or Julio Jones based on the phases of the moon or their horoscopes.

First Year Performance

I adopted a common scoring system from most standard fantasy football leagues when quantifying first-year performance:

- Touchdowns: 5 points

- Every 10 receiving yards: 1 point

- Every 10 rushing yards: 1 point

- Every fumble: -2 points

For example, let’s say you draft a running back and he scores five touchdowns, rushes for 500 yards, and fumbles the ball twice during his rookie season. The points for this player would be (5 * 5) + (500 * 0.1) + (2 * -2) = 71 points. Additionally, 50 rushing or receiving yards is considered equivalent to one touchdown.

Collecting Combine and Draft Data

The first thing we’ll do is pull combine results and draft position data for all rookies in the NFL from 2011-2016. The rvest package will do the heavy lifting.

libs = c('rvest', 'dplyr', 'janitor',

'GGally', 'zeallot', 'mgcv',

'knitr', 'kableExtra', 'readr')

lapply(libs, require, character.only = TRUE)

years = seq(2011, 2016)

combine_data = data.frame(NULL)

draft_position_data = data.frame(NULL)for(y in years){

print(paste0("COLLECTING DATA FROM ", y))

combine_url = paste0('http://nflcombineresults.com/nflcombinedata.php?year=',

y,

'&pos=&college=')

# collect combine data

yearly_combine_data = combine_url %>%

read_html() %>%

html_nodes(xpath = '//*[@id="datatable"]/table') %>%

html_table(fill = TRUE, header = TRUE) %>%

as.data.frame()

combine_data = bind_rows(combine_data,

yearly_combine_data)

draft_position_url = paste0('http://www.drafthistory.com/index.php/years/',

y)

# collect draft position data

yearly_draft_data = draft_position_url %>%

read_html() %>%

html_nodes(xpath = '//*[@id="main"]/table[1]') %>%

html_table(fill = TRUE, header = TRUE) %>%

as.data.frame()

names(yearly_draft_data) = yearly_draft_data[1,]

yearly_draft_data[2:nrow(yearly_draft_data),]

yearly_draft_data$year = y

draft_position_data = bind_rows(draft_position_data,

yearly_draft_data)

}Next, we’ll do a bit of data munging and then join the draft and combine data together.

draft_position_data_clean = draft_position_data %>%

filter(Player != 'Player') %>%

clean_names() %>%

select(-pick, -round, -college, -position) %>%

dplyr::rename(nfl_team = team,

pick = player)

combine_data_clean = combine_data %>%

clean_names() %>%

select(-na, -na_1)

# filter only players that are Running Backs & Wide Receivers

combine_draft_join = left_join(combine_data_clean,

draft_position_data_clean) %>%

filter(pos %in% c('RB', 'WR'))| year | name | college | pos | height_in | weight_lbs | wonderlic | x40_yard | bench_press | vert_leap_in | broad_jump_in | shuttle | x3cone | pick | nfl_team |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 2011 | Darvin Adams | Auburn | WR | 74.13 | 190 | NA | 4.56 | NA | NA | NA | 9.99 | 9.99 | NA | NA |

| 2011 | Anthony Allen | Georgia Tech | RB | 72.75 | 228 | NA | 4.56 | 24 | 41.5 | 120 | 4.06 | 6.79 | 225 | Ravens |

| 2011 | Armando Allen | Notre Dame | RB | 68.25 | 199 | NA | 4.52 | 23 | NA | NA | 9.99 | 9.99 | NA | NA |

| 2011 | Matt Asiata | Utah | RB | 71.00 | 229 | NA | 4.77 | 22 | 30.0 | 104 | 4.37 | 7.09 | NA | NA |

| 2011 | Jon Baldwin | Pittsburgh | WR | 76.38 | 228 | 14 | 4.49 | 20 | 42.0 | 129 | 4.34 | 7.07 | 26 | Chiefs |

| 2011 | Damien Berry | Miami (FL) | RB | 70.25 | 211 | NA | 4.58 | 23 | 33.5 | 120 | 4.12 | 7.00 | NA | NA |

| 2011 | Armon Binns | Cincinnati | WR | 75.00 | 209 | NA | 4.50 | 13 | 31.5 | 118 | 4.31 | 6.86 | NA | NA |

| 2011 | Allen Bradford | Southern California | RB | 70.88 | 242 | NA | 4.53 | 28 | 29.0 | 113 | 4.39 | 6.97 | 187 | Buccaneers |

| 2011 | DeAndre Brown | Southern Mississippi | WR | 77.63 | 233 | NA | 4.59 | 20 | 29.0 | 117 | 4.33 | 6.93 | NA | NA |

| 2011 | Vincent Brown | San Diego State | WR | 71.25 | 187 | NA | 4.68 | 12 | 33.5 | 121 | 4.25 | 6.64 | 82 | Chargers |

| 2011 | Stephen Burton | West Texas A&M | WR | 73.38 | 221 | NA | 4.50 | 19 | 34.5 | 117 | 4.31 | 7.04 | 236 | Vikings |

| 2011 | Delone Carter | Syracuse | RB | 68.63 | 222 | NA | 4.54 | 27 | 37.0 | 120 | 4.07 | 6.92 | 119 | Colts |

| 2011 | John Clay | Wisconsin | RB | 72.50 | 230 | NA | 4.83 | NA | 29.0 | 111 | 9.99 | 9.99 | NA | NA |

| 2011 | Randall Cobb | Kentucky | WR | 70.25 | 191 | NA | 4.46 | 16 | 33.5 | 115 | 4.34 | 7.08 | 64 | Packers |

| 2011 | Graig Cooper | Miami (FL) | RB | 70.00 | 205 | NA | 4.60 | 18 | NA | 114 | 4.03 | 6.66 | NA | NA |

| 2011 | Mark Dell | Michigan State | WR | 72.25 | 193 | NA | 4.54 | 14 | NA | NA | 9.99 | 9.99 | NA | NA |

| 2011 | Noel Devine | West Virginia | RB | 67.50 | 179 | NA | 4.34 | 24 | NA | NA | 9.99 | 9.99 | NA | NA |

| 2011 | Tandon Doss | Indiana | WR | 74.00 | 201 | NA | 4.56 | 14 | NA | NA | 9.99 | 9.99 | 123 | Ravens |

| 2011 | Shaun Draughn | North Carolina | RB | 70.88 | 213 | NA | 4.73 | 21 | 34.0 | 118 | 4.20 | 7.15 | NA | NA |

| 2011 | Darren Evans | Virginia Tech | RB | 72.00 | 227 | NA | 4.56 | 26 | 35.0 | 111 | 4.46 | 6.96 | NA | NA |

| 2011 | Mario Fannin | Auburn | RB | 70.38 | 231 | NA | 4.37 | 21 | 37.5 | 115 | 4.21 | 6.99 | NA | NA |

| 2011 | Edmond Gates | Abilene Christian (TX) | WR | 71.75 | 192 | NA | 4.31 | 16 | 40.0 | 131 | 9.99 | 9.99 | 111 | Dolphins |

| 2011 | A.J. Green | Georgia | WR | 75.63 | 211 | 10 | 4.48 | 18 | 34.5 | 126 | 4.21 | 6.91 | 4 | Bengals |

| 2011 | Alex Green | Hawaii | RB | 72.25 | 225 | NA | 4.45 | 20 | 34.0 | 114 | 4.15 | 6.91 | 96 | Packers |

| 2011 | Tori Gurley | South Carolina | WR | 76.13 | 216 | NA | 4.53 | 15 | 33.5 | 118 | 4.25 | 7.05 | NA | NA |

Overall looks good. One thing you’ll notice is that a lot of the players who participated in the combine don’t have a draft pick, which means that they were never drafted by a team. While there are many examples of undrafted players going on to have stellar rookie careers, these guys don’t show up much during the fantasy draft process. Thus any player without a draft pick is considered in the following analyses.

Collecting Rookie Performance Data

Next, we’ll collect the outcome variable – how many points each player scored during their rookie season - and enlist the service of the nflgame python package. We’ll write out the players and their rookie years to the rookies.csv file, pull that data into python, collect the first year stats, and then pull the output back into R.

input_file_name = "rookie_names_years.csv"

python_script_name = "collect_rookie_stats.py"

output_file_name = "year_1_rookie_stats.csv"

combine_draft_join %>%

select(year, name) %>%

write.csv(input_file_name,

row.names = FALSE)

exe_pyscript_command = paste0("//anaconda/bin/python ",

python_script_name,

" ",

"'", input_file_name, "'",

" ",

"'", output_file_name, "'"

)

print(exe_pyscript_command)We’ll execute the collect_rookie_stats.py script from within R, then read the rookie_stats.csv file back into R.

system(exe_pyscript_command)import sys

import pandas as pd

import nflgame

from pandasql import *

def collect_rushing_stats(year, week, players):

rushing_stats = list()

for p in players.rushing():

rushing_stats.append([year,

week,

" ".join(str(p.player).split(" ")[:2]),

p.rushing_tds,

p.rushing_yds,

p.fumbles_lost])

rushing_df = pd.DataFrame(rushing_stats)

rushing_df.columns = ['year',

'week',

'name',

'rushing_tds',

'rushing_yds',

'rushing_fb']

return(rushing_df)

def convert_rushing_pts(td_pts, rushing_pts, fb_pts):

return(pysqldf("""

SELECT year,

name,

td_pts + rushing_pts + fb_pts AS total_pts

FROM

(SELECT year,

name,

SUM(rushing_tds) * {td_pts} AS td_pts,

SUM(rushing_yds) * {rushing_pts} AS rushing_pts,

SUM(rushing_fb) * {fb_pts} AS fb_pts

FROM rb_df_temp

GROUP BY year, name)

NATURAL JOIN

rookie_df

""".format(td_pts = td_pts,

rushing_pts = rushing_pts,

fb_pts = fb_pts

)))

def collect_receiving_stats(year, week, players):

receiving_stats = list()

for p in players.receiving():

receiving_stats.append([year,

week,

" ".join(str(p.player).split(" ")[:2]),

p.receiving_tds,

p.receiving_yds,

p.fumbles_lost])

receiving_df = pd.DataFrame(receiving_stats)

receiving_df.columns = ['year',

'week',

'name',

'receiving_tds',

'receiving_yds',

'receiving_fb']

return(receiving_df)

def convert_receiving_pts(td_pts, receiving_pts, fb_pts):

return(pysqldf("""

SELECT year,

name,

td_pts + receiving_pts + fb_pts AS total_pts

FROM

(SELECT year,

name,

SUM(receiving_tds) * {td_pts} AS td_pts,

SUM(receiving_yds) * {receiving_pts} AS receiving_pts,

SUM(receiving_fb) * {fb_pts} AS fb_pts

FROM wr_df_temp

GROUP BY year, name)

NATURAL JOIN

rookie_df

""".format(td_pts = td_pts,

receiving_pts = receiving_pts,

fb_pts = fb_pts

)))

def main(input_file_path, output_file_path):

# define scoring scheme, years, & weeks here

td_pts = 5

receiving_pts = rushing_pts = 0.1

fb_pts = -2

game_years = range(2011, 2017)

game_weeks = range(1, 17)

# input file

global rookie_df

rookie_df = pd.read_csv(input_file_path)

# store stats for each year in player_stats_df

global player_stats_df

player_stats_df = pd.DataFrame()

for year in game_years:

print("Processing Game Data From {year}".format(year = year))

temp_df = rookie_df[rookie_df['year'] == year]

global rb_df_temp

rb_df_temp = pd.DataFrame()

global wr_df_temp

wr_df_temp = pd.DataFrame()

for week in game_weeks:

games = nflgame.games(year, week)

players = nflgame.combine_game_stats(games)

rb_df_temp = rb_df_temp.append(collect_rushing_stats(year,

week,

players))

wr_df_temp = wr_df_temp.append(collect_receiving_stats(year,

week,

players))

print 'calculating running back points'

player_stats_df = player_stats_df.append(convert_rushing_pts(td_pts,

rushing_pts,

fb_pts,

))

print 'calculating wide receiver points'

player_stats_df = player_stats_df.append(convert_receiving_pts(td_pts,

receiving_pts,

fb_pts

))

# aggregate rookies that have both receiving and running stats

stats_final_df = pysqldf("""

SELECT year, name, SUM(total_pts) as total_pts

FROM player_stats_df

GROUP BY year, name

""")

stats_final_df.to_csv(output_file_path, index = False)

if __name__ == "__main__":

pysqldf = lambda q: sqldf(q, globals())

main(sys.argv[1], sys.argv[2])

Below we’ll read the output from the collect_rookie_stats.py script back into R and examine the top 10 rows. We’ll also apply a few filters. First, all non-draft picks are eliminated from consideration. We want to control for draft pick, given that our central question is whether we can explain variation in first-year performance from information other than draft pick. Second, all rookies with less than 10 points (an arbitrary cutoff) during their rookie season are eliminated. This is to remove some of the noise that is unrelated to performance (e.g., low points due to injury and not poor performance).

rookie_stats = read_csv(file.path(working_directory, output_file_name)) %>%

inner_join(combine_draft_join) %>%

filter(pos %in% c("WR", "RB") &

is.na(pick) == FALSE &

total_pts >= 10) %>%

mutate(pick = as.numeric(pick),

pos = as.factor(pos)) %>%

select(year, name, pos, pick,

height_in, weight_lbs, x40_yard,

bench_press, vert_leap_in, total_pts

) %>%

data.frame()| year | name | pos | pick | height_in | weight_lbs | x40_yard | bench_press | vert_leap_in | total_pts |

|---|---|---|---|---|---|---|---|---|---|

| 2011 | A.J. Green | WR | 4 | 75.63 | 211 | 4.48 | 18 | 34.5 | 143.4 |

| 2011 | Austin Pettis | WR | 78 | 74.63 | 209 | 4.56 | 14 | 33.5 | 25.0 |

| 2011 | Daniel Thomas | RB | 62 | 72.25 | 230 | 4.63 | 21 | NA | 62.3 |

| 2011 | Delone Carter | RB | 119 | 68.63 | 222 | 4.54 | 27 | 37.0 | 42.4 |

| 2011 | Denarius Moore | WR | 148 | 71.63 | 194 | 4.43 | 13 | 36.0 | 87.5 |

| 2011 | Evan Royster | RB | 177 | 71.63 | 212 | 4.65 | 20 | 34.0 | 23.9 |

| 2011 | Greg Little | WR | 59 | 74.50 | 231 | 4.51 | 27 | 40.5 | 82.4 |

| 2011 | Greg Salas | WR | 112 | 73.13 | 210 | 4.53 | 15 | 37.0 | 25.2 |

| 2011 | Jacquizz Rodgers | RB | 145 | 65.88 | 196 | 4.59 | NA | 33.0 | 41.4 |

| 2011 | Jeremy Kerley | WR | 153 | 69.50 | 189 | 4.56 | 16 | 34.5 | 26.5 |

| 2011 | Jon Baldwin | WR | 26 | 76.38 | 228 | 4.49 | 20 | 42.0 | 27.7 |

| 2011 | Julio Jones | WR | 6 | 74.75 | 220 | 4.34 | 17 | 38.5 | 121.0 |

| 2011 | Kendall Hunter | RB | 115 | 67.25 | 199 | 4.46 | 24 | 35.0 | 68.1 |

| 2011 | Leonard Hankerson | WR | 79 | 73.50 | 209 | 4.40 | 14 | 36.0 | 16.3 |

| 2011 | Mark Ingram | RB | 28 | 69.13 | 215 | 4.62 | 21 | 31.5 | 75.0 |

| 2011 | Randall Cobb | WR | 64 | 70.25 | 191 | 4.46 | 16 | 33.5 | 35.0 |

| 2011 | Roy Helu | RB | 105 | 71.50 | 219 | 4.40 | 11 | 36.5 | 98.6 |

| 2011 | Shane Vereen | RB | 56 | 70.25 | 210 | 4.49 | 31 | 34.0 | 10.7 |

| 2011 | Stevan Ridley | RB | 73 | 71.25 | 225 | 4.65 | 18 | 36.0 | 42.5 |

| 2011 | Titus Young | WR | 44 | 71.38 | 174 | 4.43 | NA | NA | 79.8 |

| 2011 | Torrey Smith | WR | 58 | 72.88 | 204 | 4.41 | 19 | 41.0 | 119.7 |

| 2011 | Vincent Brown | WR | 82 | 71.25 | 187 | 4.68 | 12 | 33.5 | 42.9 |

| 2012 | Alfred Morris | RB | 173 | 69.88 | 219 | 4.63 | 16 | 35.5 | 189.1 |

| 2012 | Alshon Jeffery | WR | 45 | 74.88 | 216 | 4.48 | NA | NA | 44.1 |

| 2012 | Bernard Pierce | RB | 84 | 72.25 | 218 | 4.45 | 17 | 36.5 | 53.6 |

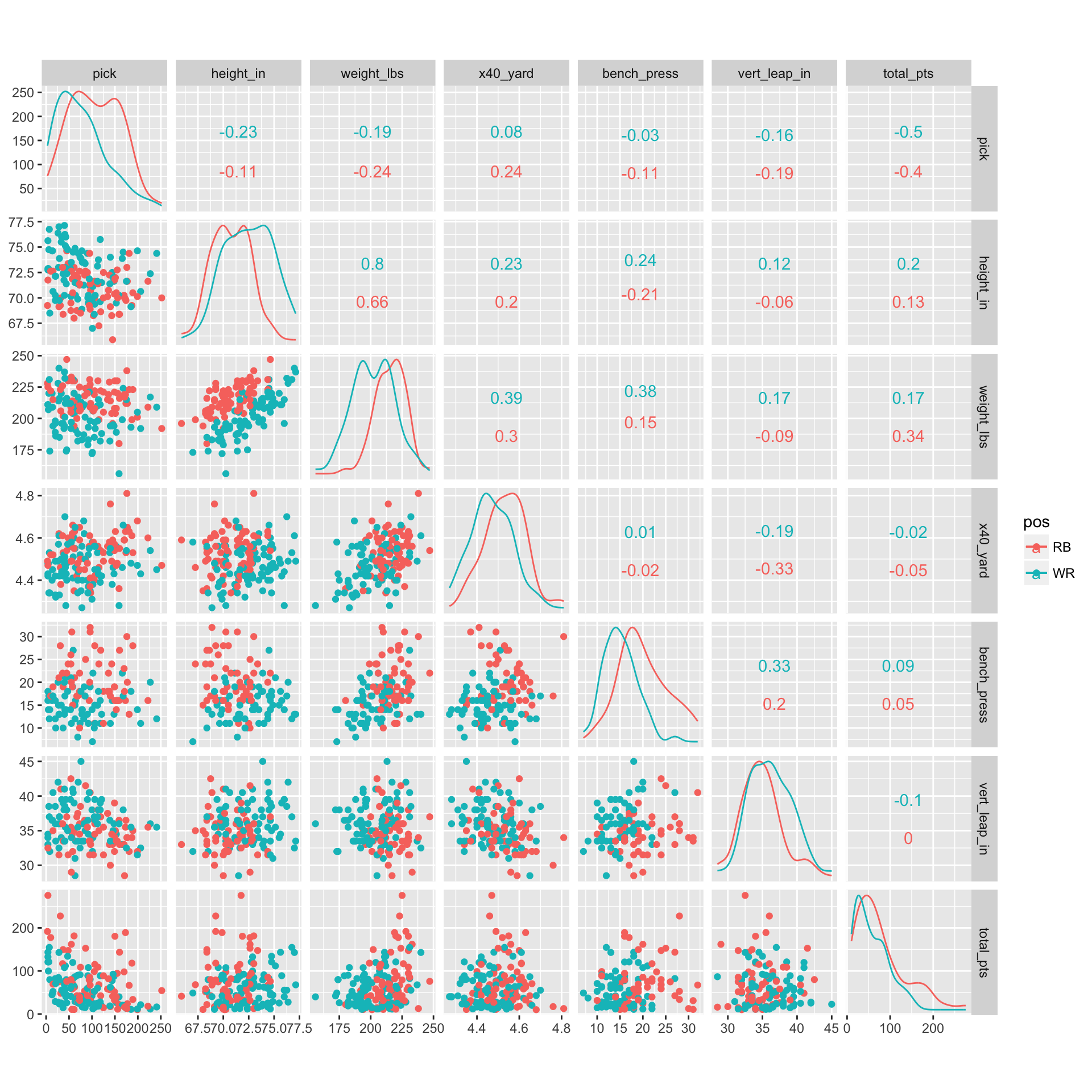

We now have all of the necessary data. We’ll visualize the bivariate relationships between our variables, segmented by position (Running Back or Wide Receiver) for a quick quality check.

g = ggscatmat(rookie_stats,

columns = 4:dim(rookie_stats)[2],

color = "pos")

plot(g) Everything looks good except for the 40 yard dash field. Most players fall in the 4-6 range except a few. Let’s examine observations with a 40 yard dash time greater than six seconds.

Everything looks good except for the 40 yard dash field. Most players fall in the 4-6 range except a few. Let’s examine observations with a 40 yard dash time greater than six seconds.

| year | name | pos | pick | height_in | weight_lbs | x40_yard | bench_press | vert_leap_in | total_pts |

|---|---|---|---|---|---|---|---|---|---|

| 2016 | Corey Coleman | WR | 15 | 70.63 | 194 | 9.99 | 17 | 40.5 | 52.4 |

| 2016 | Devontae Booker | RB | 136 | 70.75 | 219 | 9.99 | 22 | NA | 81.8 |

| 2016 | Jonathan Williams | RB | 156 | 70.00 | 220 | 9.99 | 16 | NA | 11.3 |

| 2016 | Jordan Howard | RB | 150 | 71.88 | 230 | 9.99 | 16 | 34.0 | 180.6 |

It looks like missing or invalid 40 yard dash times were coded with 9.99. Let’s sub these values with NAs and re-run the plot above.

rookie_stats$x40_yard = ifelse(rookie_stats$x40_yard == 9.99,

NA,

rookie_stats$x40_yard)

g = ggscatmat(rookie_stats,

columns = 4:dim(rookie_stats)[2],

color = "pos")

plot(g)

Ahh that’s better. Now we’re ready to do some analyses.

Explaining Rookie Performance

Based on the scatterplot matrix, draft pick explains a fair amount of variability in first-year fantasy points. Teams shell out millions of dollars for rookie players, so it’s not surprising that points and pick number are inversely related, such that lower picks (1, 2, 3) score more fantasy points during their first season than higher picks. The other variable that exhibits a relationship with points is weight, which is moderated by position. Running Backs (RBs) appear to benefit more from having a few extra pounds relative to Wide Receivers (WRs). Being able to punch the ball in from the 1-yard line means more touchdowns. Having a more robust build might translate into fewer injuries and missed games. And being heavier would make an RB harder to tackle, which means more running yards. Having extra weight is less beneficial to a WR, as they rely on their speed and agility to create separation from defenders. Indeed, heavier players run slower 40-yard dash times, and I haven’t seen too many successful WRs in the NFL who were slow. This suggests that given the option between a lighter or heavier RB, go with the big guy.

Teams do seem to factor the weight of an RB into their draft strategy, as heavier RBs are drafted earlier on. But the question is whether weight is still significant after controlling for draft pick number and accounting for position (WR vs. RB). I’ll apply my two favorite modeling approaches when interpretation is paramount: Linear Model (LM) and General Additive Model (GAM). Pick number is present in both models. The LM features an interaction term to capture the disparate relationship between weight and position. The GAM model has a smoother for both weight and pick-number, and position is included as a dummy variable. For those unfamiliar with GAM, it’s a fantastic approach for modeling non-linear relationships while maintaining a highly interpretable model. The one drawback is that it is Additive and thus doesn’t account for interactions (of course you can add interactions terms into the model, but then it’s no longer a GAM). We’ll use LOOCV (leave-one-out-cross-validation) to determine which method of describing the data generating process is most generalizable.

# function for cross validation

split_data = function(input_df, pct_train){

set.seed(1)

data_list = list()

random_index = sample(1:nrow(input_df), nrow(input_df))

train_index = random_index[1:(floor(nrow(input_df) * pct_train))]

data_list[['train_df']] = input_df[train_index,]

data_list[['test_df']] = input_df[setdiff(random_index, train_index) ,]

return(data_list)

}

input_df = rookie_stats

pct_train = 0.8

fdata = split_data(input_df, pct_train)

row_index = sample(1:nrow(fdata$train_df), nrow(fdata$train_df))

# empty vectors to store prediction errors during LOOCV

lm_pred = c()

gam_pred = c()

for(i in row_index){

# training data

temp_train = fdata$train_df[setdiff(row_index, i),]

# validation datum

temp_validation = fdata$train_df[i,]

# linear model

temp_fit_lm = lm(total_pts ~ weight_lbs * pos + pick,

data = temp_train)

# GAM model

temp_fit_gam = mgcv::gam(total_pts ~ s(weight_lbs) + s(pick) + pos,

data = temp_train)

# linear model prediction

lm_pred = c(lm_pred,

predict(temp_fit_lm, temp_validation))

# GAM model prediction

gam_pred = c(gam_pred,

predict(temp_fit_gam, temp_validation))

}Let’s consider two different measures of performance.

r_squared = function(actual, predicted){

return(1 - (sum((actual-predicted )^2)/sum((actual-mean(actual))^2)))

}

mdn_abs_error = function(actual, predicted){

return(median(abs(actual-predicted)))

}

val_actual = fdata$train_df$total_pts[row_index]

val_perf = data.frame(model = c("LM", "GAM"),

# calculate r-squared

r_squared = c(r_squared(val_actual,lm_pred),

r_squared(val_actual,gam_pred)),

# calculate MAE

median_abs_err = c(mdn_abs_error(val_actual, lm_pred),

mdn_abs_error(val_actual, gam_pred))) %>%

mutate_if(is.numeric, round, 2)

print(val_perf) ## model r_squared median_abs_err

## 1 LM 0.17 26.15

## 2 GAM 0.17 27.08The LM performed similiarly to the GAM, so we’ll use the simpler, LM model on the entire training set and examine the coefficients.

train_fit = lm(total_pts ~ weight_lbs * pos + pick,

data = fdata$train_df)

summary(train_fit)## Call:

## lm(formula = total_pts ~ weight_lbs * pos + pick, data = fdata$train_df)

##

## Residuals:

## Min 1Q Median 3Q Max

## -70.946 -27.584 -9.839 17.944 157.722

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -176.63257 101.66213 -1.737 0.08490 .

## weight_lbs 1.31363 0.46120 2.848 0.00518 **

## posWR 235.87977 119.20973 1.979 0.05016 .

## pick -0.31399 0.07396 -4.245 4.35e-05 ***

## weight_lbs:posWR -1.21318 0.56198 -2.159 0.03288 *

## ---

## Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

##

## Residual standard error: 43.19 on 119 degrees of freedom

## Multiple R-squared: 0.2424, Adjusted R-squared: 0.217

## F-statistic: 9.52 on 4 and 119 DF, p-value: 1.033e-06Interestingly, even after controlling for pick number, the interaction term is still significant. Interpreting interactions terms can be tricky, and I’ve found it helpful to just plug in a range of numbers for the variable you’re interested in and hold everything else constant to see how the predicted value changes. So that’s what we’ll do here. We’ll hold pick number at the mean, enter “RB” for the position dummy variable, and then generate a sequence between the 5th and 95th percentiles of weight. The slope of our predicted value indicates how many additional points we expect to score during a season for each RB pound.

# select 5th and 95th percentiles for weight

# hold pick number and position constant

q5_95 = unname(quantile(fdata$train_df$weight_lbs, c(0.05, 0.95)))

sim_df = data.frame(weight_lbs = seq(q5_95[1], q5_95[2], 1),

pick = mean(fdata$train_df$pick),

pos = "RB"

)

sim_df$predicted_pts = predict(train_fit, sim_df)

print(diff(sim_df$predicted_pts)[1])## [1] 1.313628As the weight of an RB increases by one pound, we expect the total number of fantasy points scored in a season to increase by ~1.3. Thus if you had two RBs drafted around the same position, but one weighed 30lbs more than the other, you would expect 39 more points from the heavier RB. Over a 16 game season, that difference translates to 2.4 more points per game, which could be the difference between making or missing the playoffs.

90 percent of weight values fall between 180 and 230, so I’d feel comfortable generalizing these findings to all RBs within this range. The data is pretty sparse outside of this range, so the relationships in the model would likely break down Indeed, I’m fairly certain a 320lb RB would not score more points than a 220lb RB; there is some point where weight likely has a negative effect on performance, but we won’t see that in the data because a 320lb RB won’t ever see the field.

While these findings are interesting, it’s not clear how helpful the model is in terms of informing our draft strategy. To answer that question, we’ll determine how the model performs on the hold-out set.

r_sq_test = round(r_squared(fdata$test_df$total_pts,

predict(train_fit, fdata$test_df)),

1)

median_abs_err = round(mdn_abs_error(fdata$test_df$total_pts,

predict(train_fit, fdata$test_df)),

2)

print(paste0("TEST R-SQUARED: ", r_sq_test))

print(paste0("TEST MAE: ", median_abs_err))## [1] "TEST R-SQUARED: 0.3"

## [1] "TEST MAE: 31.7"We can explain approximately 30 percent of the variance in the test set, and the median absolute error is around 30. The fact that performance on the test set is comparable with the validation set indicates good generalizability of our model. However, there is a lot of variance left to explain! If we wanted to improve these predictions, there are a number of other variables to consider, including:

- Injury

- Strength of Offensive Line

- Presence of skilled existing running back

- Ratio of pass to run plays in the prior season

These inputs have an impact on how many points a fantasy RB will score. But if we want to keep it simple, the key takeaway is this: When it’s fantasy draft time and you’re debating between a few rookie RBs drafted around the same spot, go with the big guy. It just might be the difference between fantasy glory or facing the receiving end of one of these…